History of Sound in Theatre

Early Sound

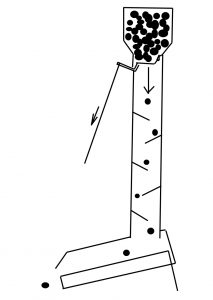

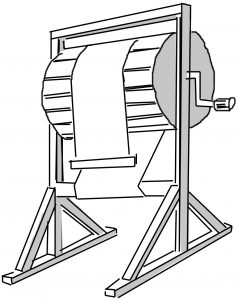

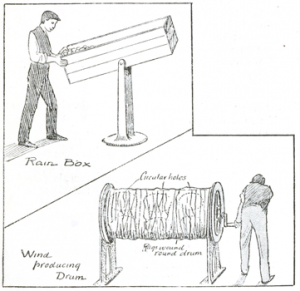

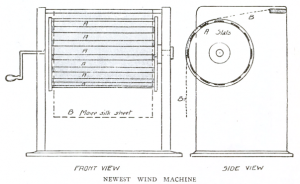

Around 3000 BC, theatrical productions in China and India were accompanied by music and sound, and we know of examples of sound usage throughout the history of theatre. Greek tragedies and comedies call for storms, earthquakes, and thunder when gods appear. There is an extensive record of the machinery that was used scenically; and although there are only a few mentions of it, machinery was also in place for the few sound effects these productions needed. In the Roman era, Heron of Alexandria invented a thunder machine that dropped brass balls onto dried hides arranged like a kettledrum, and a wind machine with fabric draped over a rotating wheel.

Roman Empire

In the 4th century BC, well before the Roman Empire, Aristotle noted that the chorus could be heard better when standing on a hard surface than on sand or straw, an early step toward understanding how reflection and absorption affect what an audience hears. You could say he was the first theatre acoustician. The Greeks also understood how sound traveled to an audience, as their stepped seating structure shows. In the 1st century BC, Vitruvius, a Roman architect, used the Greek structure to build new theatres, but he had a deeper understanding of sound: he is known as the first to claim that sound travels in waves, like the ripples after a stone is thrown in water. His work was instrumental in creating the basis of modern architectural acoustic design.

Medieval and Jacobean

Sound effects were needed for the depiction of hell and the appearance of God in religious plays; the tools, drums and stones in reverberant machinery, were held over from the Greek theatre. And of course, both sung and instrumental music played a big part in medieval plays for both transitions and ambience. In Elizabethan theatre, audiences expected more realism in their entertainment, and sound effects and music began to be written into texts. As theatre was moving indoors and becoming more professional, sound and music were used to create atmosphere, reproducing pistols, clocks, horses, fanfares, or alarms; but sound was now also being used to symbolize the supernatural and to help create drama. Sound effects are described in A Dictionary of Stage Directions in English Drama 1580-1642, which covers everything from simple effects to specific needs for battle scenes.

From shortly after Shakespeare’s death until 1660, theatre declined in England, and after the English Civil War began in 1642, it was forbidden. When King Charles II was restored to the throne after the war, theatres began to come alive again, in part because the King, while exiled in France, had become accustomed to seeing proscenium-designed theatre. Shortly after this, the first theatres were built in America, but they did not survive for more than a few years at a time. It was not until the early 1800s that theatres in New York, Philadelphia, St. Louis, Chicago, and San Francisco operated continuously.

17th – 18th – 19th Century of Sound

These centuries saw the advent of mechanical devices for sound effects and sound design: thunder runs (cannonballs rolled through chutes), thunder sheets, and wind and rain makers. The Bristol Old Vic recently reactivated its ‘thunder run’ for its 250th anniversary. These devices were developed enough to be cued by the period’s equivalent of a stage manager (SM), and they were operated by a large, dedicated “sound crew” under the guidance of someone acting as a kind of Sound Designer or Director.

Victorian Age and the Use of Recorded Sound

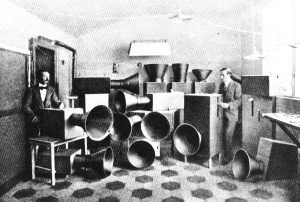

In Michael Booth’s book Theatre in the Victorian Age, there is documentation of the first use of recorded sound in theatre: a phonograph playing a baby’s cry was heard in a London theatre in 1890. In Theatre Magazine in 1906, there are two photographs showing the recording of sound effects into the horn of a gramophone for use in Stephen Phillips’ tragedy Nero.

The first practical sound recording and reproduction device was the mechanical phonograph cylinder, invented by Thomas Edison in 1877.

Bertolt Brecht cites a play about Rasputin written in 1927 by Alexej Tolstoi that includes a recording of Lenin’s voice. And sound design began to evolve even further, as long-playing records were able to hold more content.

Unconventional Sound

In 1913, Italian Futurist composer Luigi Russolo built a sound-making device called the intonarumori. This mechanical tool simulated both natural and manmade sound effects for Futurist theatrical and musical performances. He wrote a manifesto titled The Art of Noises, in which he attacked the old presentation of classical instruments and advocated tearing down the classical structure and presentational methods of the music formats of his time. This could be seen as the next stage in the use of unconventional instruments to simulate sound effects and classical instrumentation.

Foley in Theatre

Eventually, scratchy recordings were replaced with a crew of effects people for better sound quality. Circa 1930, the American company Cleon Throckmorton Inc. stated in an advertisement that it would build machinery to order to produce sound effects, saying, “every theatre should have its thunder and wind apparatus.” At that time a thunder sheet cost around $7 and a 14” drum wind machine cost around $15. This was during the Great Depression, when ticket prices ranged from $0.25 to $1.

And in the NY Herald on August 27, 1944, a cartoon depicting Foley artists backstage at the Broadhurst Theatre performing the sound effects for Ten Little Indians shows four men operating the machinery used to create surf, a boat motor, wind, and something heavy dropping.

Hollywood Comes to Broadway

The field began to grow when Hollywood directors such as Garson Kanin and Arthur Penn started directing Broadway productions in the 1950s. Because they had transitioned from silent films to ‘talkies,’ they had become accustomed to a department of people with authority over sound and music. Theatre had not yet developed this field; there were no designers of recorded sound. It would normally fall upon the stage manager to find the sound effects that the director wanted, and an electrician would play the recordings for performances. Even so, because savvy audiences could distinguish between recorded and live sounds, creating live backstage effects remained common practice for decades.

First Recognitions

The first people to receive credit for Sound Design were Prue Williams and David Collison, for the 1959 theatrical season at London’s Lyric Theatre Hammersmith. The first men to receive the title of Sound Designer on Broadway were Jack Mann, in 1961 for his work on Show Girl, and Abe Jacob, who negotiated his title for Jesus Christ Superstar in 1971. And the first person noted as Sound Designer in regional US theatre is Dan Dugan, in 1968 at the American Conservatory Theatre in San Francisco. As the technology of recording and playback of music and sound advances, so does the career of the sound designer.

The Advance of Technology

You cannot tell the history of the field of sound design without pointing out the technological advances. Someone needed to be in charge of the growing use of equipment and the knowledge it took to make sound design work. But I would be remiss if I did not state that, artistically, technology only makes choices easier to accomplish: a designer still has to take into account story, character, emotions, environments, style, and genre, and apply the tools of music, psychology, and acoustics in order to make an impression on an audience and bring them on an emotional sonic journey.

Let’s take a look at how technological advances changed how audio was recorded and played back.

- By the end of the 1950s, long-playing records had been replaced with reel-to-reel tape, and with that came the possibility of amending content more easily than pressing another disc.

- Dolby Noise Reduction (DNR) was introduced in 1966 and became an industry standard for cleaner-sounding recorded music.

- By the end of the 1970s, cassette tapes outnumbered all disc and tape usage. It was easy to amend content on them, but they were difficult to use for playback, as you had to line up cassettes to be played one by one.

- By the end of the 1980s, the compact disc and Digital Audio Tape (DAT) had become the popular modes of playback, and DAT’s new auto-cue feature made it possible to play a cue and have the tape stop before the next cue.

- In 1992 Sony came out with the MiniDisc player (MD) and theatres immediately picked it up for playback. You could amend content quickly on your computer, burn it to a CD, transfer it to the MD, rename and reorder the cues on the disc, and it too would pause at the end of a cue.

Sound designers could now work during technical rehearsals. This is one of the major shifting points in the artistry of the profession because sound designers were legitimized as collaborators now that they were able to work in the theatre with everyone else. This is not to say that sound designers were not artistic and valuable to the process as they recorded and amended their work alone; this points out that others could now see a sound designer at work in the room, and with this knowledge came understanding.

First Contracts in the US

Between 1980 and 1988, the United States Institute for Theatre Technology’s (USITT) first Sound Design Commission sought to define the duties, standards, responsibilities, and procedures of a theatre sound designer in North America. A document was drawn up and provided to both the Associated Designers of Canada (ADC) and David Goodman at the Florida local of United Scenic Artists (USA829), as both organizations were about to represent sound designers in the 1990s and needed to understand the contract language of expectations. USA829 did not adopt the contract until 2006, when unionization happened and the union accepted sound designers. Before this, sound designers worked with Letters of Agreement.

MIDI and Show Control

In the 1980s and 1990s, Musical Instrument Digital Interface (MIDI) and digital technology helped sound design grow very quickly, and eventually computerized sound systems became essential for live show control in theatres. The largest contribution to the MIDI Show Control (MSC) specification came from the Walt Disney Company, which used such systems to control its Disney-MGM Studios theme park in 1989. In 1990 Charlie Richmond headed the USITT MIDI Forum, a group that included developers and designers from theatre sound and lighting worldwide. They created a show control standard, and in 1991 the MIDI Manufacturers Association (MMA) and Japan MIDI Standards Committee (JMSC) ratified the specification they laid out. The MSC specification was first used in Disney’s Magic Kingdom Parade at Walt Disney World in 1991.
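To give a sense of what a show control message looks like on the wire, here is a minimal Python sketch that assembles the bytes of an MSC "GO" command as a MIDI System Exclusive message. It is an illustration under stated assumptions, not a complete implementation of the specification: the function name `msc_go`, the device ID, and the cue number are hypothetical, and only the simplest form of the command (a cue number with no cue list or cue path) is covered.

```python
SYSEX_START = 0xF0           # start of a MIDI System Exclusive message
SYSEX_END = 0xF7             # end of a MIDI System Exclusive message
REALTIME_ID = 0x7F           # Universal Real Time System Exclusive ID
MSC_SUB_ID = 0x02            # sub-ID that marks the message as MIDI Show Control
FORMAT_SOUND_GENERAL = 0x10  # command format: Sound (General)
COMMAND_GO = 0x01            # command: GO


def msc_go(device_id: int, cue_number: str,
           command_format: int = FORMAT_SOUND_GENERAL) -> bytes:
    """Build the bytes of a simple MSC GO message (illustrative helper)."""
    if not 0 <= device_id <= 0x7F:
        raise ValueError("device_id must fit in 7 bits")
    if not cue_number or any(ch not in "0123456789." for ch in cue_number):
        raise ValueError("cue_number may contain only digits and '.'")
    header = bytes([SYSEX_START, REALTIME_ID, device_id,
                    MSC_SUB_ID, command_format, COMMAND_GO])
    # The cue number travels as ASCII characters, e.g. "12.5".
    return header + cue_number.encode("ascii") + bytes([SYSEX_END])


if __name__ == "__main__":
    message = msc_go(device_id=1, cue_number="12.5")
    print(message.hex(" "))  # f0 7f 01 02 10 01 31 32 2e 35 f7
```

Sending these bytes to real equipment would require a MIDI output library, which is deliberately left out of this sketch.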

Adopting this technology in theatres took years because of limited capability and finances and the challenges of computerizing a system at scale. With MIDI control, you could now use a sampler for playback. Your files would be uploaded into a storage unit, and through a MIDI command you could trigger a specific file from a musical keyboard. These machines became smaller as digital storage shrank in size, and eventually Level Control Systems (LCS), Cricket, SFX, and QLab became the standards in theatre show control, each newer system offering an easier interface than the last. They eventually made all older formats (record players, reel-to-reel tape, cassette tape, compact disc, MiniDisc, and samplers) obsolete for use in theatre.

Now, in the 21st century, sound designers can be faster in tech than other elements for the first time. Content can be amended in any dreamed-up fashion and within minutes it can be ready to work with the actors on stage. This is a very fast advance in the field and no other stagecraft element has grown so quickly in so short a time, becoming a valued artistic component of professional theatre.

Union Representation, Tony Awards, and TSDCA

In 2008 after years of campaigning by USA829, who now solidly represent sound designers in their union, the Tony Award Administration Committee added two awards for sound with Mic Pool winning the first Best Sound in a Play award for The 39 Steps and Scott Lehrer winning the first Best Sound in a Musical award for South Pacific. However, in 2014, only six years later, the Tony Award Administration Committee announced that both awards would be eliminated.

Questions about the legitimate artistry, and how to properly judge sound design, were a catalyst for the formation of The Theatrical Sound Designers and Composers Association (TSDCA). TSDCA was formed in 2015 to help educate the theater-going public, as well as to support and advocate for the members of the field.

One could argue that with the advances in technology, the artistry of sound design is taken for granted because it is not as magical as it once seemed when theatre artists created the impossible in the room. Or perhaps it’s that as a species we have all become accustomed to technology and it no longer holds any mystery. Either way, the artistry of sound design is still an integral part of how human beings communicate their stories.

How Sound Works

Sound Waves

Sound waves emanate from an object that is in contact with a medium of transmission, and they are eventually perceived as sound. We usually think of sound traveling through air, the most common medium, but sound waves can also pass through solids and liquids at varying speeds. The human ear has a very intricate system for intercepting these sound waves, and the brain brings meaning to them through the auditory nerves. This produces the second meaning of the word sound: the more physical and emotional sensation of hearing, or how the sound feels, as in “the crickets at night are very peaceful.” This page will focus on the first meaning: the actual transmission of the sound wave, in which minute variations above and below atmospheric pressure produce waves of compression and rarefaction.

Inverse Square Law

The inverse square law states that the intensity of a sound wave falls off with the square of the distance from its source: the further the wave travels from the point of actuation, the smaller the change in pressure. To human ears, the sound seems to be getting quieter; in reality, the changes in atmospheric pressure are getting smaller with distance and time. Over time, the energy dissipates. At the point of emanation, the energy of the sound is concentrated in a small physical space. As it travels, that energy spreads over a broader area, so at a single point of reception the sound appears quieter. Outdoors, with no reflecting or absorbing objects, sound will behave in accordance with the inverse square law; this condition is known as a free field. There is nothing interrupting the flow of energy from the point of emanation to the point of reception.
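The inverse square law can be put into numbers with a short Python sketch. It assumes an idealized point source in a free field; the function names and the example distances are illustrative and do not come from the text above.

```python
import math


def relative_intensity(d_ref: float, d: float) -> float:
    """Intensity at distance d as a fraction of the intensity at d_ref.

    Assumes a point source radiating into a free field, so the same
    energy is spread over a sphere whose area grows with d squared.
    """
    if d_ref <= 0 or d <= 0:
        raise ValueError("distances must be positive")
    return (d_ref / d) ** 2


def level_change_db(d_ref: float, d: float) -> float:
    """Change in sound level, in decibels, when moving from d_ref to d."""
    return 10 * math.log10(relative_intensity(d_ref, d))


if __name__ == "__main__":
    for distance in (1, 2, 4, 8):
        change = level_change_db(1, distance)
        print(f"{distance} m: {change:+.1f} dB relative to 1 m")
```

Under these assumptions, each doubling of distance cuts the intensity to a quarter, which is a drop of about 6 dB. Indoors, reflections add energy back, so real rooms fall off more slowly than this.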

Wave Cycle

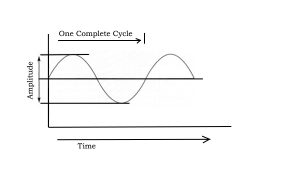

A sound wave cycle is measured from the initial increase in atmospheric pressure above the steady state, through the corresponding drop below the steady state, to the return back up to the steady state. The sound wave is in reality squeezing atmospheric particles together more than normal and then pulling them apart further than normal. The faster the object vibrates, the more wave cycles are produced per second. The number of wave cycles that occur in one second is the frequency of the wave. More vibrations per second means a higher frequency, which we perceive as higher in pitch; fewer vibrations per second means a lower frequency, which we perceive as lower in pitch.
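As a small worked example of the relationship between wave cycles and frequency, the Python sketch below computes a frequency from a count of cycles and the duration of one cycle (the period) from a frequency. The function names and the 440-cycle example are illustrative assumptions.

```python
def frequency_hz(cycles: float, seconds: float) -> float:
    """Frequency is the number of complete wave cycles per second."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return cycles / seconds


def period_seconds(frequency: float) -> float:
    """The period is the time one complete cycle takes: 1 / frequency."""
    if frequency <= 0:
        raise ValueError("frequency must be positive")
    return 1.0 / frequency


if __name__ == "__main__":
    f = frequency_hz(cycles=440, seconds=1.0)
    print(f"{f:.0f} cycles per second, one cycle every {period_seconds(f) * 1000:.2f} ms")
```

An object completing 440 cycles in one second produces a 440 Hz wave, and each cycle lasts a little over 2 milliseconds. Doubling the number of cycles per second halves the period.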

Frequency

Frequency is related to pitch in our perception, but pitch is a subjective sensation. You may not be able to hear a change in pitch with a very small change in the frequency of a sound wave. The unit of frequency, the hertz (Hz), is named after Heinrich Hertz and measures wave cycles per second. If the frequency is over 1000 Hz, we express it in kilohertz (kHz). For example, a sound wave with a frequency of 15,500 cycles per second would be notated as 15.5 kHz. Perception of these waves varies from person to person, depending on age and gender. The lowest audible frequency is around 15 Hz. The greater variance is in the perception of the higher frequencies. Young women tend to have a higher range of hearing, up to 20 kHz, whereas the average upper limit is around 15-18 kHz. With age, and with exposure to high sound pressure levels, the ability to hear high frequencies declines. Sound pressure levels are measured in decibels of sound pressure level (dB SPL).

In the diagram of the sinusoidal (sine) wave above, the steady state is the line in the center. The initial compression of atmospheric pressure takes the wave above that steady state. The corresponding decline below the steady state is the rarefaction of the atmospheric pressure. One full period is complete when the pressure comes back up to the steady state. Amplitude, the height of the waveform, reflects the size of the change in pressure. For an impulse sound, the wave starts at a greater distance from the steady state and, with time, in a free field, the pressure change diminishes until it is negligible and the amplitude (height) is smaller. To the human ear, amplitude equates to loudness.
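The notation described above can be sketched in a few lines of Python. The first helper writes a frequency in Hz or kHz; the second converts a sound pressure to dB SPL using the standard reference pressure for air of 20 micropascals, a value that is not given in the text and is stated here as an assumption of the sketch. The function names are illustrative.

```python
import math

REFERENCE_PRESSURE_PA = 20e-6  # assumed reference: 20 micropascals in air


def format_frequency(hz: float) -> str:
    """Write a frequency in Hz, switching to kHz from 1000 Hz upward."""
    if hz >= 1000:
        return f"{hz / 1000:g} kHz"
    return f"{hz:g} Hz"


def spl_db(pressure_pa: float) -> float:
    """Sound pressure level in dB SPL for an RMS pressure in pascals."""
    if pressure_pa <= 0:
        raise ValueError("pressure must be positive")
    return 20 * math.log10(pressure_pa / REFERENCE_PRESSURE_PA)


if __name__ == "__main__":
    print(format_frequency(15500))               # 15.5 kHz
    print(format_frequency(440))                 # 440 Hz
    print(f"1 Pa is about {spl_db(1.0):.0f} dB SPL")
```

Because the decibel scale is logarithmic, doubling the sound pressure adds about 6 dB rather than doubling the number.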

Sound Bounce and Absorption

Sound travels through, around, and bounces off anything that is in the path of the wave; every object with mass that a sound wave comes into contact with will vibrate as well. An object vibrates most readily at its own resonant frequency. When sound bounces off an object, this is called reflection, which is similar to the way light bounces off a mirror. Wavelengths of light are very small in comparison to those of sound, so sound waves, especially low frequency waves (longer in wavelength), will only reflect off objects of sufficient size. The size of the object will determine which frequencies flow around it and which reflect off it. If an object is between you and the source of the sound wave, the object casts a shadow between you and the source, and you perceive some frequencies less because of the absorption and reflection that object causes. Harder reflective objects rebound the wave, and softer absorbing objects soak in some of the energy of the wave.
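To see why the size of an object matters, it helps to compare it with the physical length of one wave cycle. The Python sketch below computes wavelength as the speed of sound divided by frequency. The speed of 343 m/s (dry air at roughly 20 °C) is an assumption of the sketch, as are the function name and the example frequencies.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at roughly 20 degrees C


def wavelength_m(frequency_hz: float,
                 speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Length of one wave cycle in metres: speed divided by frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_m_per_s / frequency_hz


if __name__ == "__main__":
    for f in (50, 500, 5000):
        print(f"{f} Hz -> {wavelength_m(f):.3f} m")
```

With these assumptions, a 50 Hz wave is nearly 7 metres long while a 5 kHz wave is about 7 centimetres long. As a rough rule of thumb, an object much smaller than the wavelength does little to block or reflect that frequency, which is why low frequencies bend around set pieces that shadow high frequencies.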

Reverberation

When sound bounces off ceilings, floors, and walls, the reflections combine with the original wave and have different effects on a listener depending on where they interact with the sound. The closer you are to the source of the original wave, the less you will hear the reflected waves. The further away you are from the original source, the more the combined effect is apparent, and it can at times obscure the original sound wave. The critical distance is the point where the energy from the original source equals the energy from the reflections. This can vary according to the acoustic conditions of the space in which the sound is traveling. Beyond the critical distance, where the reflected sound dominates the energy of the original source, you are in what is called the reverberant field, and the longer the reverberation time, the more clarity you lose. This is not to be confused with an echo, which is when sound waves bounce from a highly reflective surface and repeat.

Sound Designers understand the way sound is generated, moves, reacts, and dissipates in any environment. This is the foundation for understanding what happens when human beings come into contact with sound.
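For a rough feel of how the critical distance depends on the room, the Python sketch below uses a commonly quoted rule-of-thumb approximation derived from Sabine's reverberation formula: critical distance ≈ 0.057 × √(Q × V / RT60), with V the room volume in cubic metres, RT60 the reverberation time in seconds, and Q the directivity of the source. This formula is not taken from the text above; it is an assumption of the sketch, and the function name and room values are hypothetical.

```python
import math


def critical_distance_m(volume_m3: float, rt60_s: float,
                        directivity: float = 1.0) -> float:
    """Approximate critical distance in metres (rule-of-thumb estimate).

    volume_m3:   room volume in cubic metres
    rt60_s:      reverberation time in seconds
    directivity: 1.0 for a source radiating equally in all directions
    """
    if volume_m3 <= 0 or rt60_s <= 0 or directivity <= 0:
        raise ValueError("all inputs must be positive")
    return 0.057 * math.sqrt(directivity * volume_m3 / rt60_s)


if __name__ == "__main__":
    # Hypothetical 5000 cubic metre auditorium at three reverberation times.
    for rt60 in (1.0, 1.5, 2.5):
        d = critical_distance_m(5000.0, rt60)
        print(f"RT60 {rt60:.1f} s -> critical distance about {d:.1f} m")
```

The trend is the useful part: a longer reverberation time pulls the critical distance closer to the source, so more of the audience sits in the reverberant field.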

How We Hear

Excerpt from The Art of Theatrical Sound Design – A Practical Guide by Victoria Deiorio

Biology, Physics, and Psychology

When we recreate life or dream up a new version of our existence, as we do in theatre, we rely on the emotional response of an audience to further our intention of why we wanted to create it in the first place. Therefore, we must understand how what we do affects a human body sensorily. And in order to understand human reaction to sound we have to go back to the beginning of life on the planet and how hearing came to be.

The first eukaryotic life forms (organisms whose cells have a well-defined nucleus) sensed vibration. Vibration sensitivity is one of the very first sensory systems; it began with the externalization of proteins that created small mobile hairs that we now call cilia. This helped the earliest life forms to move around, and transformed them from passive to active organisms. The hairs moved in a way that created a sensory system that could detect changes in the movement of the fluid in which they lived.

At first this was helpful because it indicated the presence of predators or prey. Eventually an organism could detect its environment at a distance because of the vibration it felt through the surrounding fluid; essentially, it was interpreting the vibration through the sense of touch on the cilia. To go from this simple system to the complexity of the human ear is quite an evolutionary jump that took billions of years, but we cannot deny the link.

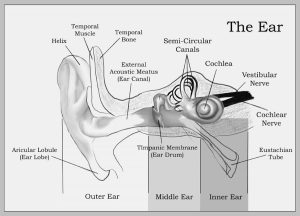

Now if we look at the human ear, it is an organ that is equipped to perceive small, rapid alternations in air pressure. The inner ear contains the cochlea, a snail-shaped spiral structure that is filled with fluid to aid in balance and hearing. In the fluid-filled spiral there are hair cells with mechanosensing organelles about 10-50 micrometers in length. These hairs capture high and low frequencies depending on their placement within the cochlea (higher frequencies at the start, and lower frequencies further inward along the spiral). They convert mechanical pressure stimuli into electrical signals that then travel along the auditory nerve to the central nervous system.

Simply put, sound enters the ear; vibrations are detected in the fluid by cilia and are converted into perception at the brain. You may also want to take note that we use this same process of capturing vibrations and creating an electrical signal, to then be interpreted through different ‘brains’ of technology as our signal flow of the mechanical side of our work as sound designers. Our ears are the best example of organic signal flow.

Sound can be split between two aspects, physics and psychology. In physics we don’t remap every vibration on a one-to-one basis. That would be too overwhelming for us to interpret.

There are two innate conditions to our hearing.

- Firstly, we have a specific range of frequencies within which we hear. We don’t hear the highest frequencies that a bat produces when flying around on a quiet rural evening. And a train rumble to us is not nearly as violent as it is for an elephant in a city zoo that uses infrasonic (extreme low end frequency) communication.

- Secondly, from our perceptible frequencies we selectively choose what we want to hear so that the aural world around us is not overpowering. When new meaning is not being introduced, we selectively choose to filter out what we don’t need to hear.

This brings us to the aspect of psychology through perception and psychophysics, the study of the relationship between stimuli and the sensations and perceptions evoked by those stimuli.

Perception

The first step to the development and evolution of the mind is the activation of psychophysics in the brain. If you have the understanding of what you are experiencing around you, you can apply that understanding to learn and grow. This is how the mind evolves. And in our evolution our minds developed a complex way to interpret vibrations and frequency into meaning and emotion.

Everything in our world vibrates because where there is energy there is a vibratory region, some more complex than others but never totally silent. The brain seeks patterns and is continually correlating sensation with perception of the energy that hits our bodies at a constant rate. When the brain interprets repetitive vibration, it creates a neural pathway of understanding around that vibration, which is why we can selectively block out sound we don’t need to hear, e.g., the hum of fluorescent lights or the whir of a computer. But when a random pattern occurs, like a loud noise behind us, we immediately adjust our focus to comprehend what made that sound, where it is in relation to us, and whether we are in danger.

Hearing is a fast processing system. Not only can the hair cells in your ears pinpoint vibrations and specific points in the phase of a vibration (up to 5,000 times per second), they can also register changes to that vibration 200 times per second at a perceptual level. It takes a thousandth of a second to perceive the vibration in the inner ear, a few milliseconds later the brain has determined where the sound is coming from, and it hits the auditory cortex, where we perceive the meaning of the sound, less than 50 milliseconds after the vibration hit the outer ear.

The link between visual and sound is an important factor to our work as sound designers. At the auditory cortex we identify tone and comprehension of speech, but it is not just for sound. There are links between sound and vision in sections of the brain to help with comprehension. For example, the auditory cortex can also help with recognizing familiar faces that accompany familiar voices.

The more we learn about the science of the brain, the more physical interconnectedness we find between the sensory perception functions. This is why when sound in theatre matches the visual aspects of what is seen, it can be an incredibly satisfying experience. It is the recreation of life itself and when it is precisely repeated, it is as though we are actually living what is being presented in the production.

What is fascinating about the perception of hearing is that it does not solely occur in the auditory parts of the brain; it projects to the limbic system as well. The limbic system controls the physical functions of heart rate and blood pressure, but also cognitive functions such as memory formation, attention span, and emotional response. It is why music can not only change your heart rate, but also bring you back in time to a specific moment that contained detailed emotions. This link to the limbic system is the aspect of cognitive hearing we use the most when placing music into a theatrical performance to support what is happening emotionally on stage.

Philosophy of How We Hear

As a sound designer, you need to know what the collective conscious experience is with sound. Any experience that human beings encounter can be reproduced on stage either in direct mirroring or a metaphoric symbol. Although this happens at the physical level of re-creation, often because it is a dramatized telling of a story, the reproduction lives within the metaphysical level of experience.

In order to understand the difference between the physical and metaphysical experience of sound, we must use Phenomenology (a philosophy which deals with consciousness, thought, and experience). When we are able to break apart the experience of hearing sound to master control over it, we can use it as a tool to affect others. You want to learn sound’s constitution in order to be able to control it.

Most theatregoers do not recognize the sound that accompanies a performance unless they are specifically guided to notice it. We provide the perception of depth of the moment-to-moment reality by filling in the gaps of where the visual leaves off. In order to recreate true to life experiences, we supply the aural world in which the event exists.

If we do not normally take note of how we process sound in our lives and its existence in our experiences, we will not be able to ascertain its presence in a theatrical production as an audience member. And for most people, sound in theatre will be imperceptible because their ears are not tuned to know how they perceive sound. We, as sound designers, want to use that to our advantage. But we can only do that if we completely understand how human beings process sound.

Manipulative Usage Because of Evolution

In a theatrical environment the audience is listening with goal-directed attention, which focuses the sensory and cognitive skill on a limited set of inputs. Therefore, we as sound designers have the ability to shape the sonic journey and create specific selected frequencies and attenuation by making the environment as complex or pure as we determine in our design.

We use stimulus-based attention when we want to capture awareness and redirect focus because certain sound elements create triggers due to the lack of previous neural path routing. We create sonic environments by bringing attention to only what we supply; there is nothing for an audience to filter out, it is all on purpose.

When a sudden loud sound happens it makes the audience jump, and now if we add to it a low frequency tone, the brain automatically starts creating subconscious comparisons because the input limitation is the only information the audience is receiving. We control what they hear and how they hear it.

Low frequencies have their own special response in a human being. There is an evolutionary reason why low frequencies immediately make the brain suspect there is danger nearby. Some have suggested that hearing the high amplitude infrasonic aspects of an animal growl immediately forces humans into a fight or flight response. More importantly though, loud infrasonic sound is not only heard, it is felt. It vibrates the entire body including the internal organs. Even with the subtlest use of low frequency, you can create unease internally.

One of the most powerful tools for a sound designer is the ability to create the feeling of apparent silence. This is relative to the sound experience preceding and following the silence. It has its own emotional response because we are normally subconsciously subjected to constant background noise. The absence of sound increases attention and it can increase the ear’s sensitivity.

The increase of attention because of silence has the same effect as the increase of attention from a sudden loud noise, with one exception: the detection of the absence of sound is slower. Perhaps this comes from the ‘silence before the storm’ feeling of impending danger, the sense that something is wrong because something is missing. Or it may come from how in nature insects stop making noise when a predator is near.

It would be safe to assume that fear governs survival, making you either stay and fight, or run away. And this learned evolutionary behavior comes from the need to survive and has dictated the commonality of our reaction to this type of sound.

There is no specific region of the brain that governs positive complex emotions derived from sound, making it more complicated to understand what triggers it. Positive emotions come from behavioral development. What makes one person excited could be boring to the next because it is less of a reactive emotion than one that is built from experience.

There are universal sonic elements that imply that loudness equals intensity, low frequency equals power and large size, slowness equals inertia, and quickness equals imminence. These elements common to sonic communication are exactly what sound designers use to guide an audience’s response to a specific storytelling journey.

Complex emotional response comes from complex stimuli, and in theatre the cleanest way to produce positive complex responses is to have multisensory integration. In simple terms, if the sound matches the visual in timing, amplitude, and tone, it produces a gratifying reaction even if the emotion is negative. This is because it activates more regions of the brain.

But let’s be clear about our specific medium: the most powerful and common stimulus for emotional response is sound. And this resides mostly in our hearing of music and the associations we attach to it.

Music

Music draws attention to itself, particularly in music that is not sung. The meaning lies in the sound of the music. We listen reflectively to wordless music. It enlivens the body because it plays upon a full range of self-presence. Music is felt in its rhythms and movements. The filling of auditory space equates to losing distance as you listen, and therefore creates the impression of penetration.

The sound of music is not the sound of unattended things that we experience in our day-to-day existence. It is constructed and therefore curiously different. Each piece of music, each genre or style is a new language to experience. Music comes to be from silence and it shows the space of silence as possibility. The tone waxes and wanes at the discretion of the musician, and composers can explore the differences between being and becoming, and actuality and potentiality.

In his book Listening and Voice: Phenomenologies of Sound, Don Ihde remarks: “The purity of music in its ecstatic surrounding presence overwhelms my ordinary connection with things so that I do not even primarily hear the symphony as the sounds of instruments. In the penetrating totality of the musical synthesis it is easy to forget the sound as the sound of the orchestra and the music floats through experience. Part of its enchantment is in obliteration of things.”

Music can be simply broken down into the idea that it is a score with instrumentation. It is a type of performance. And if we look towards how we respond to music, it can be found in the definition of each unique performance and its effect upon us. A score will tell a musician how to perform a piece of music, and they can play it note-perfect. But one performance can vary from another because of the particular approach to the music by the individuals involved in creating it.

Because one cannot properly consider a work of art without considering it as meaningful, the art of music must be defined as having meaning. To define it as meaningful would lead you to think that it is possible to actually say what a specific piece of music means. Everything is describable. But if you put the meaning of music into words, it almost seems to trivialize it, because words cannot capture the entirety of its meaning.

What we can communicate when defining music is its characteristic. Most use emotion to do this. For example, the music can be sad. But is it the particular phrase in the music that is sad; or is it the orchestration; or is it a totality of the entire piece that creates the analogy? Or more specifically, can it be the experience of hearing the music that creates the feeling of sadness? But even this is ambiguous because if you find a definition about how the music affected you emotionally, in describing it you still have to find what about the expression was effective to everyone else who experienced it.

We can try to describe music in the sense of its animation. The properties of its movement correspond to the movement properties of natural expression. A sad person moves slowly, and perhaps this is somehow mirrored in the piece of music. The dynamic character of the music can resemble human movement. Yet this alone would also not fully explain what it is to experience sad music because human movement implies change of location and musical movement does not.

When music is accompanying an additional form of expression as it does many times in theatre, you can evoke the same two reactions from an audience. The first is that the audience empathizes with the experience; they feel the sadness that the performance is expressing (within). And the second is that they can have a sympathetic reaction; they feel an emotion in response to what is being expressed (without).

What is so dynamic to music is that it can function as a metaphor to emotional life. It can possess qualities of a psychological drama, it can raise a charge towards victory, it can struggle with impending danger, or it can even try to recover a past lost. And it can describe both emotional and psychological states of mind. It is almost as if the experience is beyond the music even though we are not separated from it. Our inner response may divert in our imagination, but it is the music that is guiding us. That is why the experience of listening to music is often described as transporting us.

Music is perhaps an ordered succession of thoughts that could be described as a harmonic and thematic plan by the composer. And in this idea we supply an application of dramatic technique to music in order to create a conversation between the player and the listener. We use words like climax and denouement with a unity of tone and action. We even use the term character. We develop concepts and use music as a discourse to express metaphor. But at times intellectual content is almost coincidental. We did not set up this conversation to be heard intellectually; it is intended to be felt emotionally. The composer and musician direct our aural perception as the listener.

Feeling Music

It seems that music should lend itself to a scientific explanation of mathematics, frequency, composition of tone, amplitude, and time; however, there is so much more to music than what can be studied. There have been interpretations of different intervals in music and the reaction they elicit in the listener. The main determination of emotional response comes from whether the interval contains consonance or dissonance.

Consonance is a combination of notes that are harmonic because of the relationship of their frequencies. Dissonance is the tension that is created from a lack of harmony in the relationship of their frequencies. The specific determination of these terms has changed throughout history with the exploration of new ways of playing music and remains very difficult to come to consensus over.
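
To make the idea of a "relationship of frequencies" concrete, here is a minimal sketch in Python. It assumes the conventional just-intonation ratios for a handful of intervals and uses a deliberately crude score (numerator plus denominator of the ratio) as a stand-in for how simple the relationship is. That score and the function names are assumptions made for illustration only; as noted above, there is no agreed definition of consonance, and this is not offered as one.

```python
from fractions import Fraction

# Conventional just-intonation frequency ratios for a few intervals.
# Other tuning systems (e.g. equal temperament) use slightly different values.
INTERVALS = {
    "octave": Fraction(2, 1),
    "perfect fifth": Fraction(3, 2),
    "perfect fourth": Fraction(4, 3),
    "major third": Fraction(5, 4),
    "minor second": Fraction(16, 15),
    "tritone": Fraction(45, 32),
}


def ratio_complexity(ratio):
    """Illustrative heuristic only: numerator + denominator of the reduced ratio.

    Smaller numbers mean a simpler frequency relationship. This is an
    assumption for the sketch, not an established measure of consonance.
    """
    return ratio.numerator + ratio.denominator


def upper_frequency(base_hz, ratio):
    """Frequency in Hz of the upper note of an interval built on base_hz."""
    return float(base_hz * ratio)


def rank_by_simplicity(intervals):
    """Interval names ordered from the simplest ratio to the most complex."""
    return sorted(intervals, key=lambda name: ratio_complexity(intervals[name]))


if __name__ == "__main__":
    base = 440  # A above middle C, in Hz
    for name in rank_by_simplicity(INTERVALS):
        ratio = INTERVALS[name]
        print(f"{name}: {ratio} -> {upper_frequency(base, ratio):.1f} Hz "
              f"(complexity {ratio_complexity(ratio)})")
```

In this sketch the octave and the perfect fifth come out as the simplest relationships, while the minor second and the tritone come out as the most complex, which loosely matches the intervals traditionally heard as restful and as tense. It says nothing about timbre, context, or culture, all of which shape what a listener actually perceives.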

What is known scientifically is that the listener responds differently to intervals that are consonant than to intervals that are dissonant. This goes further into the meaning of perception when we take into account the different emotional responses to intervals in a major key or in a minor key. This is why we tend to think of major keys as happy and minor keys as sad. However, keep in mind that both major and minor keys can contain consonance and dissonance.

Instrumentation

Every musical instrument creates its own harmonics based on the material of which it is made and how it is played (struck, bowed, forced air, etc.). And these harmonics add to the perception of consonance and dissonance. This is the reason why a violin will produce a different emotional response than that of a tuba. Each instrument has its own emotional tone; and when combined, the harmonics mix together in a way that creates a complexity of different emotional states.

When you place that complexity into a major or minor key using either consonance or dissonance and vary the speed and volume, you achieve music. A great composer uses each instrument’s emotional tone, whether alone or in concert with other instruments, to convey what they want their audience to feel.

Rhythm

Rhythm can be defined as a temporal sequence imposed by metre and generated by musical movement. And yet not all music is governed by metres and bar lines. Arabic music, for example, composes its time into cycles that are asymmetrically added together. The division of time by music is what we recognize as rhythm. For a sound designer it is important to note that the other aspect of rhythm is not about time; it is the virtual energy that flows through the music. This causes a human response that allows us to move with it in sympathy.

Each culture depends on its own interpretation of rhythm. A Merengue will have a much different feeling than a Viennese waltz. The sociological and anthropological aspects of rhythm vary greatly, and rhythm is the lifeblood of how subgroups of people express themselves. The differences can be as broad as the rhythms of different countries or as fine as those of neighboring regions that live in close proximity. Rhythm is a form of expression and can be extremely particular depending on who is creating it and who is listening to it.

Association of Music

Music is one of the strongest associational tools we can use as sound designers.

One of the most often remarked-upon associations is John Williams’ theme music for the movie Jaws. A single tuba sounding very much alone slowly plays a heartbeat pattern that speeds up until it has accelerated to the point of danger.

This is the perfect example of how repetition and the change of pace in music can cause your heart rate to speed up or slow down. It is why we feel calm with slower pieces of music, and excited with more complex or uneven tempos. The low-frequency resonance of the tuba imparts the feeling of danger approaching. When matched with the visual aspect of what you are trying to convey, a woman swimming alone in the ocean at night, the association is a very powerful tool to generate mood, feeling, and emotion.

In sound design you also have the ability to practice mind control where you can transport your audience to a time and place they have never been, or to a specific time and place that supports the production. Have you ever had a moment when you are driving in a car and a song from when you were younger comes on the radio and you are instantly flooded with the emotions, sights, smells, and feelings of when that song first hit you and what you associated with it in the past?

When you’re done reliving these sense memories, you have still been driving safely and continuing the activity you were doing. But for a moment your mind was taken over while your brain still functioned fully. The song controlled you temporarily. Music has the ability to transform your surroundings by changing the state of your mind.

Non-Musical Sound

Sound that is not musical can also be composed, and it can evoke feeling as well. Sonic construction of non-musical phrasing pushes the boundaries of conventional musical sounds. And if music lies within the realm of sound, it can be said that even the active engagement of listening to compound non-musical sound can evoke an emotional response. It can be tranquil, humorous, captivating, or exciting. The structure and instrumentation of the composition is what will give the impression of metaphor.

We use non-musical sound a great deal in sound design as we are always looking for new ways of conveying meaning. And depending on the intention and thematic constructs of a piece of theatre, we create soundscapes from atypical instrumentation that are meant to evoke a feeling or mood when heard.

Technological Manipulation of Music and Sound

As far as the technology of amplification and manipulation of sound is concerned, I think of it this way: recorded music vs. playing live music is similar to printed words vs. the act of writing on a page. Music has been made universal with prolific genres and styles, and it is pervasive in our society. And recorded music allows for the distribution of music globally.

At the beginning of recorded music, it lacked a purity of sound no matter how good the reproduction. It was recording the live playing without thought to the auditory focus, fields, or horizons. Now, we approach recorded music with intention, knowing it is its own form of music that is separate from live music. The electronic elements of music no longer get in the way; they aid in creating a more dynamic production of music.

With a shift in musical technology, a deeper shift of insight and creativity can occur. There can be infinite flexibility and possibilities. Just as in the past, instruments needed to be invented, developed, played, and then tuned to create music. The same applies to technology. And although humans have been experiencing and producing music in diverse cultures since ancient times, this is but the next step in how we create music as artists.

Music’s language is based on who is exercising control. The composer, conductor, and musician exercise control over pitch, timbre, dynamics, attack, duration, and tempo. There are attributes to the voice of the instrument and to the space within which it is played. The control is a manipulation of sonic events and their relationship to each other.

Mixing engineers manipulate the rules that influence these relationships within a subtle to broad range. And those composers and musicians who implement virtual instruments into their writing have a wider range of rules than was available in the past.

The creative application of spatial and temporal rules can be aesthetically pleasing. When sound or music elicits an aural image of space in support of the visual aspects, like it can in theatre, space is not necessarily a real environment. And it can be exciting when differences in aural and visual space coexist simultaneously.

Let’s look at the manipulation of the electronic components that deliver sound. In the past, audio engineers would change, adjust, add, or remove components from their signal-processing algorithms. These would create ‘accidental’ changes in the sound. The engineers would refine their understanding of the relationship between the parameters and algorithms of their equipment and the sound that was produced.

Now, aural artists with audio engineers can create possibilities of imaginative sound fields. This highlights the interdependence between the artists, both aural and engineer, with science. No matter how abstract or technical the specifics of the sound presentation, each complex choice has real implications to how it will sound.

The mixing engineer can function as musician, composer, conductor, arranger, and aural architect. They can manipulate the music and the space wherein it lives and then distribute it to an audience. There are rarely any notations in musical compositions that contain specifics regarding spatial acoustics, and engineers have taken on the traditional responsibilities of the acoustic architect.

When an audio engineer designs an artificial reverberator to achieve other than natural reverberation in a space, the audio mixer then adjusts the parameters, and together they replace the acoustic architect who built the theatre. Physical naturalness becomes then an unnecessary constraint and it is replaced through intention by artistic meaningfulness.

There is an art to engineering and mixing that should be explored to its fullest. What is possible with technology and the manipulation of sound to create new aural landscapes can be stretched to the limits of imagination. All of it, done with the intended effect in mind, can make a strong impression upon an audience.

Overall

As you can see, there is great depth to how human beings experience sound and how that perception gives way to meaning and emotion. The intricacies of how this happens exist mostly subconsciously. And when creating theatre art, whose purpose is to evoke deliberate emotional response, we must understand how to construct sound and music to support the intentions outlined for the production.

We take into account how the body interprets vibration, perceives properties, creates meaning, and responds emotionally to sound and music. The science is the grounding layer to what then becomes the metaphysical. We move then into psychology of the individual and group, and how that influences the perceived effect. Who we are internally and externally defines the response to sound around us. And the next added layer on top of science is the cultural aspect and anthropology to how we as individuals relate to others in our environments.

We, as sound designers, consider all of this in order to create richness of design, reality of place, illusion of metaphorical ideas, and emotional content. Successful artistry in sound design is both instinctual and researched. And it is based on human reaction.